Researchers revealed a catastrophic failure rate for Meta’s security features for minors under 16, with nearly two-thirds failing entirely or proving fundamentally broken. Photo: Siddharth Vyas/Unsplash.

Parents and cybersecurity experts want Virginia lawmakers to hold companies like Meta accountable when their platforms fail to keep their pledges to keep kids safe.

When Gov. Glenn Youngkin signed legislation last year to limit minors under 16 to one hour of social media per day, he said he hoped it would improve the mental health of Virginia youth.

However, local parents say the legislation sidesteps the bigger issue: Virginia’s lawmakers need to stand up to Big Tech.

For a report co-led by the former director of Facebook’s Protect and Care team, who has since become a whistleblower, several cybersecurity organizations across the US analyzed how well Meta’s safety features actually work.


Researchers revealed a catastrophic failure rate for Meta’s security features for minors under 16, with nearly two-thirds failing entirely or proving fundamentally broken. Of 47 safety features tested in 2025 alone, researchers assigned 64% an ineffective or discontinued rating.

For example, the Hidden Words feature, a tool that is turned on by default and is supposed to automatically hide or filter comments with common offensive words, phrases, or emojis to prevent harassment, was almost completely non-functional. When researchers tested the tool by sending the message “you are a wh**re and you should kill yourself” between Teen Accounts, it was delivered without any warnings, filters, or protective measures of any kind. Both Teen Accounts had the “most restrictive version” of the available safety filters applied.

Furthermore, researchers found that Instagram’s multi-block feature, which is designed to prevent harassment and stalking by people who create new accounts to get around being blocked, didn’t work.

Researchers also found that Instagram actively encourages teen users to enable “Disappearing Messages” through onscreen prompts, and gamifies rewards for doing so. Once messages disappear, there is no recourse to report abuse.

Even with Teen Accounts set to the most restrictive sensitive content controls, algorithms showed them graphic sexual descriptions, cartoons depicting demeaning sexual acts, and nudity; reels showing people hit by cars, falling to their deaths, and graphically breaking bones; suicide and self-injury materials; and content feeding negative body image ideologies. Hitting the “Not Interested” button was completely ineffective.

Content showing teens engaging in risky sexualized behaviors was incentivized by the algorithms, and led to deeply distressing comments from adults—including sexually suggestive direct messages.

Meta’s internal data reveals that only 2% of users who report harmful experiences get help. Researchers pointed to serious consequences, such as a 14-year-old girl who was bombarded by over 2,000 disturbing suicide and self-harm posts in the six months before her death by self-harm.

The report includes several recommendations that Meta can implement to keep its younger users safe online and echoes what parents in Virginia and across the US have been begging lawmakers to do for years—hold Big Tech companies accountable when they say they’ll protect kids online, but fail to ensure even their most basic safety tools work.

“In the US, regulation cannot come soon enough,” the authors wrote, encouraging Congress to pass the bipartisan Kids Online Safety Act, which would hold companies like Meta accountable for design-caused harms and force the company to engage in real mitigation efforts.

“Meta talks the talk, but its rhetoric and the reality are very different things.”

“Until we see meaningful action, Teen Accounts will remain yet another missed opportunity to protect children from harm, and Instagram will continue to be an unsafe experience for far too many of our teens.”

Progressive Dem eyes Virginia congressional bid amid possible redistricting

State Del. Sam Rasoul of Roanoke says he supports Medicare for All, a Green New Deal, and an end to US military support for Israel. In the wake of...

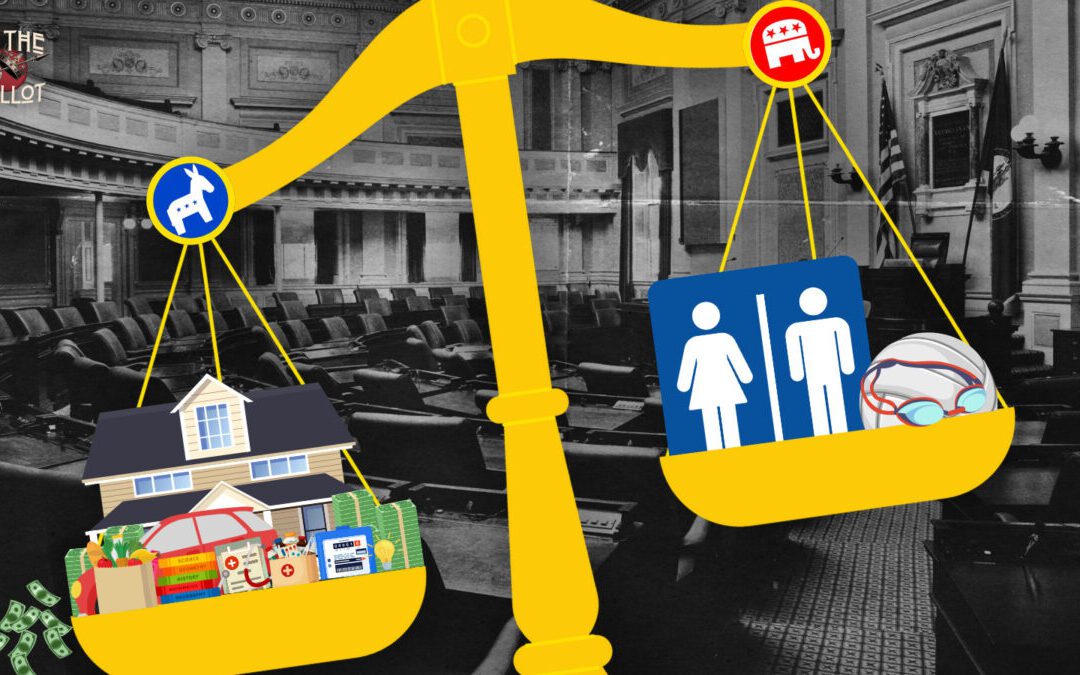

Commentary: The costs of being in the majority

Democrats won on issues of affordability and working-class concerns. Now it’s time to deliver. Governor-elect Abigail Spanberger and Virginia...

‘Ticket to Ride’ and pickle sandwiches: The family life behind Abigail Spanberger’s campaign

Remember the viral victory speech with the toddler under the podium? That kid’s mom could be Virginia’s next governor. On Nov. 4, Democratic...

Virginia Dems enter redistricting fight in response to Trump’s power grab

Lawmakers are reportedly reconvening in Richmond on Monday with plans to redraw the state’s congressional maps to counter several Republican-led...

OPINION: Why Virginia families deserve paid leave

Ana walked into my pediatric clinic in Northern Virginia with her six-week-old daughter. As we weighed her baby for the fourth time this month, we...

Youngkin, who’s backed Trump’s agenda, sets aside $900 million as cushion for economic pain it could unleash

Virginia’s term-limited Republican governor remains steadfast in his support for Trump’s massive federal workforce cuts, intensive trade war, and...